Apache Hadoop is a leading framework for big data processing. Its ability to store, process, and analyse enormous volumes of data on clusters of commodity hardware has made it a staple for many businesses. To understand how Hadoop works, you need to understand its architecture. This article explains the Hadoop architecture: its components, how they relate to one another, and the role each plays in handling large amounts of data.

Hadoop architecture overview

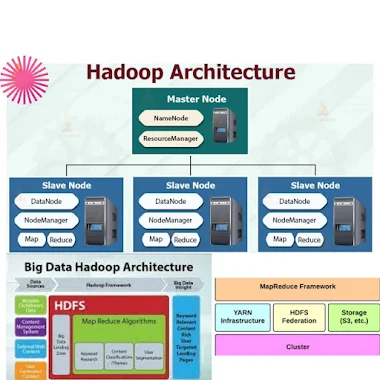

The Hadoop architecture consists of four key components: the Hadoop Distributed File System (HDFS), YARN (Yet Another Resource Negotiator), MapReduce, and Hadoop Common. Let's look at each of these components in turn to build a complete picture of the Hadoop architecture.

Hadoop Distributed File System (HDFS)

HDFS is the storage layer of Hadoop. It handles large volumes of data by splitting files into large blocks (128 MB by default) and distributing replicated copies of those blocks across multiple commodity hardware nodes. The architecture of HDFS follows a master-slave model. The key elements of HDFS are:

A) NameNode: it serves as the master node and manages the file system namespace and metadata. The NameNode keeps track of the directory tree, file permissions, and which DataNodes hold each block of every file. It does not store the file data itself.

B) DataNode: these are slave (worker) nodes that store the actual data as blocks. DataNodes serve read and write requests from clients and create, delete, and replicate blocks on instruction from the NameNode.

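Communication between the NameNode and the DataNodes is essential for data storage, replication, and fault tolerance. Each DataNode sends periodic heartbeats and block reports to the NameNode, which uses them to detect failed nodes and re-replicate the blocks those nodes held.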
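As a rough illustration of what the NameNode tracks, the sketch below models its metadata in plain Python. Each file maps to a list of block IDs, and each block maps to the DataNodes holding its replicas. The `NameNode` class and `add_file` method are hypothetical. The 128 MB block size and replication factor of 3 match the HDFS defaults (`dfs.blocksize`, `dfs.replication`). The round-robin placement, however, is a simplification of the rack-aware placement policy that HDFS actually uses.

```python
import math
from dataclasses import dataclass, field

# HDFS defaults in Hadoop 2+ (dfs.blocksize, dfs.replication).
BLOCK_SIZE = 128 * 1024 * 1024  # 128 MB
REPLICATION = 3


@dataclass
class NameNode:
    """Hypothetical toy NameNode: stores metadata only, never file contents."""
    datanodes: list[str]
    # file path -> [(block_id, [DataNodes holding a replica]), ...]
    namespace: dict[str, list[tuple[int, list[str]]]] = field(default_factory=dict)
    next_block_id: int = 0

    def add_file(self, path: str, size_bytes: int) -> list[tuple[int, list[str]]]:
        """Split a file into fixed-size blocks and record where each replica lives."""
        num_blocks = math.ceil(size_bytes / BLOCK_SIZE)  # the last block may be partial
        copies = min(REPLICATION, len(self.datanodes))
        blocks = []
        for _ in range(num_blocks):
            # Simplified round-robin placement; real HDFS uses a rack-aware policy.
            start = self.next_block_id % len(self.datanodes)
            replicas = [self.datanodes[(start + i) % len(self.datanodes)] for i in range(copies)]
            blocks.append((self.next_block_id, replicas))
            self.next_block_id += 1
        self.namespace[path] = blocks
        return blocks


nn = NameNode(["dn1", "dn2", "dn3", "dn4"])
blocks = nn.add_file("/logs/app.log", 1024 ** 3)  # a 1 GB file
print(len(blocks), blocks[0])  # 8 (0, ['dn1', 'dn2', 'dn3'])
```

With these defaults, a 1 GB file becomes 8 blocks. At three replicas per block, it occupies about 3 GB of raw cluster storage.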


YARN (Yet Another Resource Negotiator)

YARN acts as the resource management layer of the Hadoop architecture. It manages and allocates resources to the applications running on the Hadoop cluster. YARN consists of two key components:

A) ResourceManager: the ResourceManager oversees the allocation of resources across all applications. It keeps track of the resources available in the cluster and allocates containers (bundles of memory and CPU on a specific node) to running applications.

B) NodeManager: a NodeManager runs on each individual node and manages that node's resources. It launches and monitors containers, tracks their resource usage, and reports back to the ResourceManager.
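The division of labour between the ResourceManager and the NodeManagers can be sketched as follows. The sketch assumes that each NodeManager simply reports its free memory and vcores. The classes and the first-fit `allocate` loop are hypothetical. Real YARN uses a pluggable scheduler such as the Capacity Scheduler or the Fair Scheduler, and NodeManagers report their capacity through heartbeats rather than shared objects.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class NodeManager:
    """Hypothetical snapshot of the free capacity one NodeManager reports."""
    name: str
    free_memory_mb: int
    free_vcores: int


class ResourceManager:
    """Hypothetical toy ResourceManager that grants containers first-fit."""

    def __init__(self, nodes: list[NodeManager]) -> None:
        self.nodes = {node.name: node for node in nodes}

    def allocate(self, memory_mb: int, vcores: int) -> Optional[str]:
        """Grant a container on the first node with enough free capacity."""
        for node in self.nodes.values():
            if node.free_memory_mb >= memory_mb and node.free_vcores >= vcores:
                node.free_memory_mb -= memory_mb
                node.free_vcores -= vcores
                return node.name
        return None  # no node fits; the request would wait for resources to free up

    def release(self, node_name: str, memory_mb: int, vcores: int) -> None:
        """Return a finished container's resources to its node."""
        node = self.nodes[node_name]
        node.free_memory_mb += memory_mb
        node.free_vcores += vcores


rm = ResourceManager([NodeManager("nm1", 4096, 4), NodeManager("nm2", 2048, 2)])
print(rm.allocate(3072, 2))  # nm1
print(rm.allocate(2048, 1))  # nm2
print(rm.allocate(1024, 4))  # None
```

The third request fails because no single node still has four free vcores. The request succeeds once a running container releases its resources.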

MapReduce

MapReduce is the processing layer of the Hadoop architecture. It provides a programming model for processing large data sets in parallel across a cluster. The MapReduce framework divides a job into multiple map and reduce tasks, which are then distributed across the nodes in the cluster. The key components of MapReduce are:

A) Mapper: the mapper processes input data and generates intermediate key-value pairs.

B) Reducer: the reducer receives the intermediate key-value pairs produced by the mappers, grouped by key, and performs aggregation or summarization operations on them.

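C) JobTracker (Hadoop 1.x only): the JobTracker coordinated MapReduce jobs by assigning tasks to available TaskTrackers. In Hadoop 2 and later, this role is split between the YARN ResourceManager and a per-job ApplicationMaster.

D) TaskTracker (Hadoop 1.x only): TaskTrackers ran on individual nodes and executed the tasks assigned to them by the JobTracker. In Hadoop 2 and later, tasks run in YARN containers managed by NodeManagers.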
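To make the mapper and reducer roles concrete, here is a minimal word-count sketch in plain Python. It runs in a single process with no Hadoop dependency. The function names `mapper`, `shuffle`, `reducer`, and `word_count` are hypothetical and are not Hadoop's Java `Mapper`/`Reducer` API. The `shuffle` function stands in for the grouping and sorting that the framework performs between the map and reduce phases.

```python
from collections import defaultdict
from typing import Iterable, Iterator


def mapper(line: str) -> Iterator[tuple[str, int]]:
    """Map phase: emit an intermediate (word, 1) pair for every word in a line."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    """Group intermediate values by key and sort by key (the framework's shuffle step)."""
    grouped: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return dict(sorted(grouped.items()))


def reducer(key: str, values: list[int]) -> tuple[str, int]:
    """Reduce phase: aggregate all values that share a key."""
    return key, sum(values)


def word_count(lines: list[str]) -> dict[str, int]:
    intermediate = (pair for line in lines for pair in mapper(line))
    return dict(reducer(key, values) for key, values in shuffle(intermediate).items())


print(word_count(["the cat sat", "The dog sat"]))
# {'cat': 1, 'dog': 1, 'sat': 2, 'the': 2}
```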

Hadoop Common

Hadoop Common comprises the shared libraries and utilities required by the other Hadoop components. It includes modules such as Hadoop Auth (authentication), Hadoop IPC (inter-process communication), and Hadoop security.

Understanding the interactions

The interactions between these components are crucial for the seamless functioning of the Hadoop architecture.

Here's a simplified overview of how the components interact:

1. Job execution flow:

A) The client submits a job to the Hadoop cluster.

B) The ResourceManager accepts the job and launches a per-job ApplicationMaster in a container. (In Hadoop 1.x, the JobTracker received the job instead.)

C) The ApplicationMaster creates map tasks (one per input split) and reduce tasks, requests containers for them from the ResourceManager, and has the NodeManagers launch those containers.

D) The map tasks execute and produce intermediate key-value pairs.

E) The reducers aggregate the intermediate results and generate the final output.
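The job execution steps above can be simulated in a single process to show how input splits, map tasks, and reducers fit together. This is a hypothetical word-count sketch, not Hadoop code. `make_splits`, `partition`, and `run_job` are illustrative names. `zlib.crc32` stands in for Hadoop's default hash partitioning so that keys land on the same reducer on every run.

```python
import zlib
from collections import defaultdict


def make_splits(records: list[str], split_size: int) -> list[list[str]]:
    """Divide the input into splits; one map task is created per split."""
    return [records[i:i + split_size] for i in range(0, len(records), split_size)]


def partition(key: str, num_reducers: int) -> int:
    """Choose the reducer for a key with a stable hash (stand-in for hash partitioning)."""
    return zlib.crc32(key.encode("utf-8")) % num_reducers


def run_job(records: list[str], split_size: int, num_reducers: int) -> dict[str, int]:
    # Split the job: each input split becomes one map task (run sequentially here).
    splits = make_splits(records, split_size)

    # Map tasks emit intermediate (word, 1) pairs, routed to one partition per reducer.
    partitions = [defaultdict(list) for _ in range(num_reducers)]
    for split in splits:
        for record in split:
            for word in record.split():
                partitions[partition(word, num_reducers)][word].append(1)

    # Each reducer handles only its own keys, in sorted order, and aggregates them.
    output: dict[str, int] = {}
    for reducer_input in partitions:
        for word in sorted(reducer_input):
            output[word] = sum(reducer_input[word])
    return output


print(sorted(run_job(["a b a", "c b", "a"], split_size=2, num_reducers=3).items()))
# [('a', 3), ('b', 2), ('c', 1)]
```

Because every occurrence of a key is sent to the same partition, the final counts do not depend on how many splits or reducers are used. Only the distribution of work changes.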

2. Data storage flow:

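A) The client contacts the NameNode to read or write data.

B) The NameNode returns the locations of the data on the DataNodes: the DataNodes holding each block of the file (for a read), or the DataNodes that should store each new block (for a write).

C) The client communicates directly with the relevant DataNodes to read or write the data. File contents never pass through the NameNode.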
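The read path of the data storage flow can be sketched like this, using a toy in-memory cluster. The `NameNode`, `DataNode`, `get_block_locations`, and `read_file` names are hypothetical. The sketch shows that the NameNode only returns block locations, while the bytes themselves come straight from the DataNodes. If one node is unavailable, the client falls back to another replica.

```python
class NameNode:
    """Hypothetical toy NameNode: file path -> ordered (block_id, replica locations)."""

    def __init__(self, block_map: dict[str, list[tuple[str, list[str]]]]) -> None:
        self.block_map = block_map

    def get_block_locations(self, path: str) -> list[tuple[str, list[str]]]:
        """Return metadata only: which DataNodes hold each block of the file."""
        return self.block_map[path]


class DataNode:
    """Hypothetical toy DataNode: block_id -> block contents."""

    def __init__(self, blocks: dict[str, bytes]) -> None:
        self.blocks = blocks

    def read_block(self, block_id: str) -> bytes:
        return self.blocks[block_id]


def read_file(path: str, namenode: NameNode, datanodes: dict[str, DataNode]) -> bytes:
    data = bytearray()
    # Steps A-B: ask the NameNode where the file's blocks are stored.
    for block_id, locations in namenode.get_block_locations(path):
        # Step C: read each block directly from a DataNode, trying replicas in order.
        for node_name in locations:
            node = datanodes.get(node_name)
            if node is not None and block_id in node.blocks:
                data += node.read_block(block_id)
                break
        else:
            raise IOError(f"no reachable replica for block {block_id}")
    return bytes(data)


namenode = NameNode({"/data/greeting.txt": [("blk_1", ["dn1", "dn2"]), ("blk_2", ["dn2", "dn3"])]})
# dn1 is down, so blk_1 is read from its second replica on dn2.
datanodes = {"dn2": DataNode({"blk_1": b"hello ", "blk_2": b"world"}), "dn3": DataNode({"blk_2": b"world"})}
print(read_file("/data/greeting.txt", namenode, datanodes))  # b'hello world'
```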

Hadoop architecture is a powerful framework that enables the storage, processing, and analysis of big data. Through its core components (HDFS, YARN, MapReduce, and Hadoop Common), it provides a scalable and fault-tolerant solution for large-scale data processing. By understanding how these components interact, organizations can make full use of Hadoop to gain valuable insights from their data.

If you want to go deeper into Hadoop architecture, many further resources are available, such as architecture diagrams, slide presentations, and PDF documents, that explain the framework in more detail.

FAQ

What are the 4 main components of the Hadoop architecture?

- HDFS: The Hadoop Distributed File System (HDFS) is a distributed file system that stores large amounts of data reliably across a cluster. It splits files into blocks and replicates each block on several nodes, so it scales by adding nodes and keeps working when individual nodes fail.

- MapReduce: MapReduce is a programming model for processing large datasets in parallel. Developers write map and reduce functions, and the framework handles distribution, scheduling, and re-running failed tasks. It can process structured, semi-structured, and unstructured data.

- YARN: Yet Another Resource Negotiator (YARN) is the resource management layer of Hadoop. It manages the cluster's compute resources, chiefly memory and CPU (vcores), and schedules them among applications. Besides MapReduce, it can run other processing engines, such as Apache Spark and Apache Tez.

- Common: Hadoop Common is a set of libraries and utilities used by the other Hadoop components. It provides shared functionality such as data serialization and compression.

What is HDFS and MapReduce architecture in Hadoop?

HDFS and MapReduce are the two main components of the Hadoop architecture. HDFS is the storage layer, and MapReduce is the processing layer.

HDFS stores data in a distributed manner across a cluster of nodes, which provides scalability and fault tolerance. MapReduce processes that data in parallel. Where possible, it runs map tasks on the nodes that already store the relevant HDFS blocks (data locality), which reduces network transfer and makes processing large datasets efficient.

What is the GFS architecture in Hadoop?

GFS is not part of Hadoop. GFS (the Google File System) is Google's proprietary distributed file system, described in a 2003 paper, and HDFS was designed after it.

The two systems share the same basic architecture: a single master that holds the file system metadata (the GFS master, or the HDFS NameNode) and many worker nodes that store large, fixed-size pieces of files replicated across machines (GFS chunkservers storing chunks, or HDFS DataNodes storing blocks). GFS was used internally at Google.

HDFS is the open-source file system that brought this design to Apache Hadoop, and it has been Hadoop's storage layer from the start. HDFS did not replace GFS in Hadoop; they are separate systems.

What are the 2 main features of Hadoop?

The two main features of Hadoop are:

- Scalability: Hadoop is designed to scale horizontally, meaning that storage and processing capacity grow by adding commodity nodes to the cluster.

- Fault tolerance: Hadoop is designed to be fault-tolerant, meaning that it can continue to operate even if some of the nodes in the cluster fail, because data is replicated across nodes and failed tasks are re-executed.
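Replication is what makes this fault tolerance possible. The sketch below shows the idea under simplified assumptions. When a DataNode fails, its replicas are dropped from the block map, and any block left below the target replication factor is copied to another live node. `handle_datanode_failure` is a hypothetical helper. In HDFS, the NameNode detects failures through missed heartbeats and schedules re-replication itself.

```python
REPLICATION = 3  # HDFS default replication factor (dfs.replication)


def handle_datanode_failure(block_map: dict[str, list[str]], failed: str,
                            live_nodes: list[str]) -> dict[str, list[str]]:
    """Hypothetical helper: drop a failed DataNode and restore the replication factor.

    block_map maps each block ID to the DataNodes that hold a replica of it.
    """
    repaired: dict[str, list[str]] = {}
    for block_id, replicas in block_map.items():
        survivors = [node for node in replicas if node != failed]
        if not survivors:
            raise RuntimeError(f"block {block_id} is lost: no surviving replica")
        # Copy the block from a survivor to live nodes that don't already hold it.
        targets = [node for node in live_nodes if node != failed and node not in survivors]
        while len(survivors) < REPLICATION and targets:
            survivors.append(targets.pop(0))
        repaired[block_id] = survivors
    return repaired


block_map = {"blk_1": ["dn1", "dn2", "dn3"], "blk_2": ["dn2", "dn3", "dn4"]}
print(handle_datanode_failure(block_map, failed="dn1", live_nodes=["dn2", "dn3", "dn4", "dn5"]))
# {'blk_1': ['dn2', 'dn3', 'dn4'], 'blk_2': ['dn2', 'dn3', 'dn4']}
```

A block is lost only if every node holding one of its replicas fails before re-replication completes. This is why the default of three replicas tolerates individual node failures well.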

What are the 3 main parts of the Hadoop infrastructure?

The three main parts of the Hadoop infrastructure are:

- The Hadoop Distributed File System (HDFS): HDFS is the storage layer of Hadoop. It stores data in a distributed manner across a cluster of nodes.

- The MapReduce programming model: MapReduce is a programming model that allows for the parallel processing of large datasets.

- The YARN resource management framework: YARN is the resource management layer of Hadoop. It manages the compute resources of the Hadoop cluster, chiefly memory and CPU.

What are the 5 pillars of Hadoop?

The five pillars of Hadoop are:

- Scalability: Hadoop is designed to scale horizontally, meaning that storage and processing capacity grow by adding commodity nodes to the cluster.

- Fault tolerance: Hadoop is designed to be fault-tolerant, meaning that it can continue to operate even if some of the nodes in the cluster fail.

- Availability: Hadoop is designed to be highly available, meaning that data remains accessible even if some of the nodes in the cluster are unavailable, because each block is replicated on several DataNodes.

- Cost-effectiveness: Hadoop is designed to be cost-effective, meaning that it can store and process large datasets at relatively low cost because it runs on commodity hardware rather than specialized servers.

- Ease of use: Hadoop is designed to be easy to use.
